
Generative AI has already changed the world, but not all that glitters is gold. While consumer interest in the likes of ChatGPT is high, there's growing concern among both experts and consumers about the dangers of AI to society. Worries around job loss, data security, misinformation, and discrimination are some of the main areas causing alarm.

AI is the fastest-growing concern in the US, up 26% from only a quarter ago.

AI will no doubt change the way we work, but companies need to be aware of the issues that come with it. In this blog, we'll explore consumer worries around job and data security, and how brands can ease those concerns and protect both themselves and consumers from potential risks.

1. Safeguarding generative AI

Generative AI tools, like ChatGPT and the image creator DALL-E, are quickly becoming part of daily life, with over half of consumers seeing AI-generated content at least weekly. As these tools need vast amounts of data to learn and generate responses, sensitive information can slip into the mix.

With many moving parts, generative AI platforms let users contribute code in various ways in the hope of improving processes and performance. The downside is that with so many contributors, vulnerabilities often go unnoticed and personal information can be exposed. This exact scenario is what happened to ChatGPT in early May 2023.

With over half of consumers saying a data breach would cause them to boycott a brand, data privacy needs to be a priority. While steps are being taken to write laws on AI, in the meantime brands need to self-impose transparency rules and usage guidelines, and make these known to the public.

2 in 3 consumers want companies that create AI tools to be transparent about how they're being developed.

Doing so can build brand trust, an especially coveted currency right now. Apart from quality, data security is one of the most important factors when it comes to trusting brands. With brand loyalty increasingly fragile, brands need to reassure consumers that their data is in safe hands.

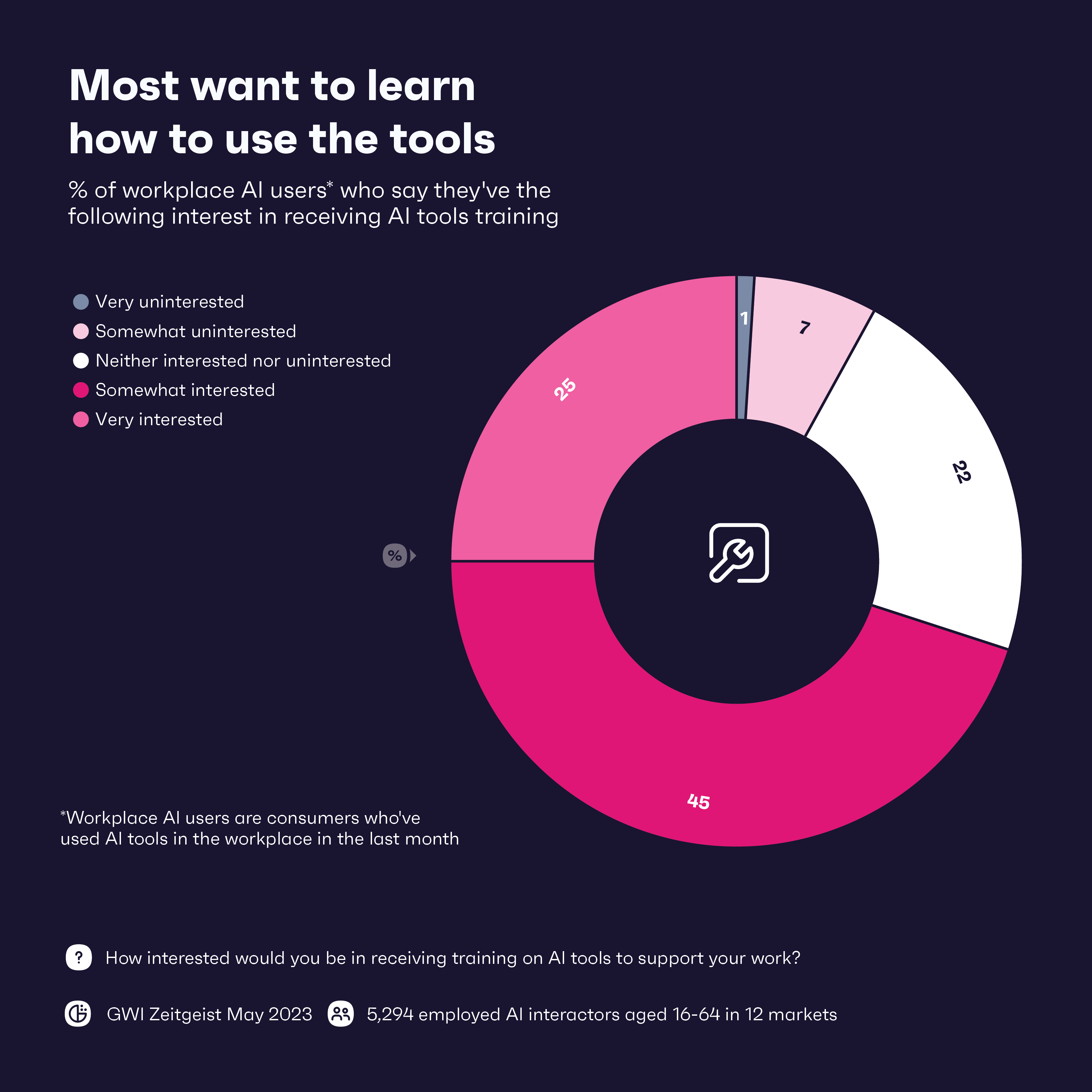

So what's one of the best ways to go about securing data in the age of AI? The first line of defense is training staff in AI tools, with 71% of workers saying they'd be interested in such training. Combining this with data-protection training is equally important. Education is really key here: arming staff with the knowledge needed to keep data privacy front-of-mind will go a long way.

2. Keeping it real in a fake news world

Facebook took 4.5 years to reach 100 million users. By comparison, ChatGPT took just over two months to reach that milestone.

As impressive as generative AI's rise is, it's been a magnet for fake news in the form of fabricated audio and video, known as deepfakes. The tech has already been used to spread misinformation worldwide. Only 29% of consumers are confident in their ability to tell AI-generated content and "real" content apart, a figure likely to fall as deepfakes get more sophisticated.

Nearly two-thirds of ChatGPT users say they interact with the tool as they would with a real person, which shows how persuasive the tool could potentially be.

But consumers have seen this coming; 64% say they're concerned that AI tools can be used for unethical purposes. Given this concern, and low confidence in detecting deepfakes, brands can make a difference by protecting consumers from this latest wave of fake news and educating them on how to identify such content.

Brands can start by implementing source verification and conducting due diligence on any information they want to share or promote. In the same vein, they can partner with fact-checkers or use in-house fact-checking processes on any news stories they receive. For most brands, these measures will likely already be in place, as fake news and misinformation have been rampant for years.

But as deepfakes get smarter, brands will need to stay on top of them. To beat them, brands may need to turn to AI once more, in the form of AI-based detection tools that can identify and flag AI-generated content. These tools will become a necessity in the age of AI, but they may not be enough, as bad actors are usually a step ahead. Still, a combination of detection tools and human oversight to correctly interpret context and credibility could thwart the worst of it.

Transparency is also key. Letting consumers know you're doing something to tackle AI-generated fake news can earn their trust, and help set industry standards that keep everyone in step against deepfakes.

3. Combatting inherent biases

No one wants a PR nightmare, but that's a real possibility if brands aren't double- and triple-checking the information they get from their AI tools. Remember, AI tools learn from data scraped from the internet, data that's full of human biases, errors, and discrimination.

To avoid this, brands should be using diverse and representative datasets to train AI models. While completely eliminating bias and discrimination is near impossible, using a wide range of datasets can help weed some of it out. More consumers are concerned about how AI tools are being developed than not, and some transparency on the subject could make them trust these tools a bit more.

While all brands should care about using unbiased data, certain industries need to be more careful than others. Banking and healthcare brands in particular need to be hyper-aware of how they're using AI, as these industries have a history of systemic discrimination. According to our data, behavior that causes harm to specific communities is the top reason consumers would boycott a brand, and the terabytes of data used to train AI tools can contain exactly this kind of harmful material.

In addition to a detailed review of the datasets being used, brands also need people, ideally ones with diversity, equity, and inclusion (DE&I) training, to oversee the whole process. According to our GWI USA Plus dataset, DE&I is important to 70% of Americans, and they're more likely to buy from brands that share their values.

4. Striking the right balance with automation in the workplace

Let's address the elephant in the room. Will AI be friend or foe to workers? There's no doubt it'll change work as we know it, but how big AI's impact on the workplace will be depends on who you ask.

What we do know is that a large majority of workers expect AI to have some kind of impact on their job. Automation of large parts of employee roles is expected, especially in the tech and manufacturing/logistics industries. On the whole, workers seem enthusiastic about AI, with workers in 8 of 12 sectors saying automation will have a positive impact.

On the flip side, nearly 25% of workers see AI as a threat to jobs, and those who work in the travel and health & beauty industries are particularly worried. Generative AI seems to be improving exponentially every month, so the question is: if AI can handle mundane tasks now, what comes next?

Even if AI does take some 80 million jobs globally, workers can find ways to use AI effectively to enhance their own skills, even in vulnerable industries. Customer service is set to undergo major upgrades with AI, but it can't happen without humans. Generative AI can deal with most inquiries, but humans need to be there to handle sensitive information and provide an empathetic touch. Humans can also work with AI to provide more personalized solutions and recommendations, which is especially important in the travel and beauty industries.

AI automating some tasks can free up workers to contribute in other ways. They can dedicate extra time to strategic thinking and coming up with innovative solutions, possibly resulting in new products and services. It will be different for every company and industry, but those able to strike the right balance between AI and human workers should thrive in the age of AI.

The final prompt: What you need to know

AI can be powerful, but brands need to be aware of the risks. They'll need to protect consumer data and stay alert to fake news. Transparency will be key. Consumers are worried about the future of AI, and brands that show they're behaving ethically and responsibly will go a long way toward reassuring them.

The tech is exciting, and will likely have a positive impact on the workplace overall. But brands should proceed with caution and try to strike the right balance between tech and human capital. Employees will need extensive training on ethics, security, and correct application, and providing it will elevate their skills. By integrating AI tools to work alongside people, rather than outright replacing them, brands can strike a balance that will set them up for the AI-enhanced future.
